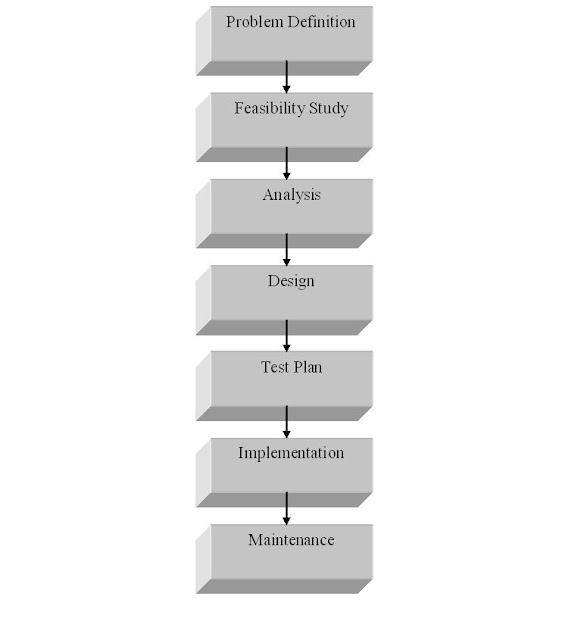

SOFTWARE DEVELOPMENT LIFE CYCLE

OVERVIEW

The need to plan for and develop safe, secure, and reliable system solutions is heightened by an increasing dependence on technology to provide services, develop products, administer programs, and perform management functions. There is also a need to ensure privacy and security when developing information systems, to establish uniform privacy and protection practices, and to develop acceptable implementation strategies for these practices.

The System Development Life Cycle (SDLC) methodology is designed to satisfy these needs by establishing procedures and practices governing the initiation, definition, design, development, deployment, operation, maintenance, enhancement, and eventual retirement of automated information systems.

PURPOSE

The purpose of a Systems Development Life Cycle methodology is to provide IT Project Managers with the tools to help ensure successful implementation of systems that satisfy Agency strategic and business objectives. The documentation provides a mechanism to ensure that executive leadership, functional managers, and users sign off on the requirements and implementation of the system. The process gives Agency managers and the Project Manager the visibility into design, development, and implementation status needed to ensure delivery on time and within budget.

GOALS

The goals of this SDLC approach are to:

• Deliver quality systems which meet or exceed customer expectations when promised and within cost estimates.

• Provide a framework for developing quality systems using an identifiable, measurable, and repeatable process.

• Establish a project management structure to ensure that each system development project is effectively managed throughout its life cycle.

• Identify and assign the roles and responsibilities of all involved parties, including functional and technical managers, throughout the system development life cycle.

• Ensure that system development requirements are well defined and subsequently satisfied.

OBJECTIVES

The Systems Development Life Cycle methodology will help to achieve these goals by:

• Establishing appropriate levels of management authority to provide timely direction, coordination, control, review, and approval of the system development project.

• Ensuring project management accountability.

• Documenting requirements and maintaining traceability of those requirements throughout the development and implementation process.

• Ensuring that projects are developed within the current and planned information technology infrastructure.

• Identifying project risks early and managing them before they become problems.


THE SDLC PHASES

DETAILS

· The feasibility analysis determines whether development of the project is actually required. It ensures that developing the candidate system is a feasible solution to the prevailing problem.

· The requirement analysis lists what is required of the project: which inputs are vital and which outputs are expected from the system. The user requirements are also enumerated in this phase.

· The design phase covers the design of the project framework, the basic structure of the project, and the actual coding of the software system.

· Testing is the major quality control measure used during software development. Its basic function is to detect errors in the software. It uncovers errors in the code as well as errors introduced during the earlier phases.

SYSTEM ANALYSIS

NEED

The need for this project arose from the following problems in the current system:

1) The application had to be installed manually every time it was used.

Users had to prepare reports manually for various purposes.

2) The application was static, so no new features could be added, which limited its scope.

3) The evaluation of the rating was manual.

4) There was no security. Anybody could access others' applications.

5) A lot of time was spent on various evaluations because they had to be done manually, wasting the company's valuable time.

6) Any addition had to be communicated to every auditor separately, which took a lot of time and effort.

Requirements Analysis

This phase formally defines the detailed functional user requirements using high-level requirements identified in the Initiation, System Concept, and Planning phases. It also delineates the requirements in terms of data, system performance, security, and maintainability requirements for the system. The requirements are defined in this phase to a level of detail sufficient for systems design to proceed. They need to be measurable, testable, and relate to the business need or opportunity identified in the Initiation Phase. The requirements that will be used to determine acceptance of the system are captured in the Test and Evaluation Master Plan.

The purposes of this phase are to:

· Further define and refine the functional and data requirements and document them in the Requirements Document.

· Complete business process reengineering of the functions to be supported, e.g., verify what information drives the business process, what information is generated, who generates it, where the information goes, and who processes it.

· Develop data and process models, including system inputs and outputs.

· Develop the test and evaluation requirements that will be used to determine acceptable system performance.

DETAILS

In this phase the customer was interviewed in person so that he understood what was expected of him and could state what he expected in turn. The detailed requirements of the project, as given by the customer, are:

1 The project should be multi-user.

2 The project should be completely secure.

3 There should be no wastage of the workers' time or loss of efficiency.

4 All the evaluations required for the project should be done without any effort from the auditors or anyone else.

All these requirements were gathered from the customer. On this basis, various Data Flow Diagrams (DFD) and flowcharts were constructed, which describe the project path in complete detail.

DESIGN PHASE

The most creative and challenging phase of the system life cycle is system design. The term design describes both a final system and the process by which it is developed. It refers to the technical specifications that will be applied in implementing the candidate system. It also includes the construction of the programs and program testing.

1 The first step is to determine how the output is to be produced and in what format.

2 The second step is to design the input data and the master files to meet the user requirements. The final report also includes the procedural flowcharts, record layouts, and data flow diagrams. This phase should stay close to the design actually proposed by the customer. In short, the design phase is a transition from a user-oriented document to a document oriented to programmers or database personnel.

Analysis Model:

The analysis model must achieve three primary objectives:

1. To describe what the customer requires.

2. To establish the basis for the creation of a software design.

3. To define a set of requirements that can be validated once the software is built.

IMPLEMENTATION PHASE

The implementation phase involves installing approved applications into production environments. Primary tasks include announcing the implementation schedule, training end users, and installing the product. Additionally, organizations should input and verify data, configure and test system and security parameters, and conduct post-implementation reviews. Management should circulate implementation schedules to all affected parties and should notify users of any implementation responsibilities.

After organizations install a product, pre-existing data is manually input or electronically transferred to a new system. Verifying the accuracy of the input data and security configurations is a critical part of the implementation process. Organizations often run a new system in parallel with an old system until they verify the accuracy and reliability of the new system. Employees should document any programming, procedural, or configuration changes made during the verification process.

Details

Implementing the project required the following:

· The first and foremost requirement is JDK version 1.5.

· Apache Tomcat 5.0 must be installed on the remote server.

· Tomcat 5.0 must be configured to use port 8080.

· Under the webapps directory, the application's own directory structure is to be created. The JSP files and the .class files are placed in this directory.

· A web.xml file is required to deploy the project.

Note: Tomcat 5.0 must be running at all times for the application to be usable. If Tomcat 5.0 stops at any moment, the application stops as well, which affects the work of the users and leads to losses for the company.
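Because the application is unavailable whenever Tomcat is down, a small availability probe can be useful. The following is a minimal sketch and not part of the original project: the class name TomcatProbe and its methods are hypothetical, and the probe only checks that something accepts TCP connections on the given port (8080 in the setup described here), not that the application itself is healthy.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.ServerSocket;
import java.net.Socket;

// Hypothetical helper, not part of the original project.
class TomcatProbe {
    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isListening(String host, int port, int timeoutMillis) {
        Socket socket = new Socket();
        try {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, timed out, or unreachable
        } finally {
            try {
                socket.close();
            } catch (IOException ignored) {
                // nothing useful to do if close fails
            }
        }
    }

    /** Self-check: opens a throwaway listener on the loopback interface and probes it. */
    static boolean selfCheck() {
        try {
            InetAddress loopback = InetAddress.getLoopbackAddress();
            ServerSocket listener = new ServerSocket(0, 1, loopback);
            try {
                return isListening(loopback.getHostAddress(), listener.getLocalPort(), 1000);
            } finally {
                listener.close();
            }
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Port 8080 matches the Tomcat configuration listed in this section.
        System.out.println("Tomcat reachable: " + isListening("localhost", 8080, 1000));
    }
}
```

Such a probe could be run on a schedule so that an outage is noticed before users report it.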

User Training

The user was given exhaustive training on how to handle the application according to his user rights. Regular training sessions were conducted after the application was implemented so that as many users as possible would receive personal attention. Every effort was made to resolve the users' doubts to their satisfaction.

The users were made to operate the application on their own a number of times before they actually started using it, so that they became well used to the environment in which they would be working. This saves the company valuable time, because users do not need to spend extra time learning the application after it has been put to use in their official work.

TESTING AND OPTIMIZATION

Testing: the hardest task

You can write a program in an hour that has 50 million possible combinations to test. The labor involved can cost a fortune. Testing is therefore often the first thing to slip when resources are stretched, but it is the entire organization that suffers when a defect causes problems. Lost revenue or reputation is probably the least harmful result of defects. Commonly cited measurements suggest that a defect that costs $1 to rectify when discovered during design will cost $1,000 to repair in production. This is a tremendous cost differential and clearly points to the advantage of early error detection.
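To make the scale of 50 million combinations concrete, the arithmetic can be sketched as follows. The input sizes (four inputs with 50, 100, 100, and 100 possible values) and the rate of one test per second are assumptions chosen only for illustration, and the class name TestEffortEstimate is hypothetical.

```java
// Illustrative arithmetic only; the input sizes and test rate are assumptions.
class TestEffortEstimate {
    /** Multiplies the number of distinct values each input can take. */
    static long combinations(int[] valuesPerInput) {
        long total = 1;
        for (int values : valuesPerInput) {
            total *= values;
        }
        return total;
    }

    /** Whole days needed to run every combination at a fixed rate. */
    static long daysToRun(long combinations, long testsPerSecond) {
        long secondsPerDay = 24L * 60 * 60;
        return combinations / (testsPerSecond * secondsPerDay);
    }

    public static void main(String[] args) {
        int[] inputs = {50, 100, 100, 100};
        long total = combinations(inputs);
        System.out.println(total);               // prints 50000000
        System.out.println(daysToRun(total, 1)); // prints 578
    }
}
```

Under these assumptions, exhaustive testing would take more than a year and a half of continuous running, which is why test cases have to be selected rather than enumerated.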

Testing vs. Quality Assurance

Quality Assurance is the process of making sure that customers get enough of what they pay for to satisfy their needs. Testing is the means by which we perform the process. You can test without assuring quality, but you can't assure quality without testing. A common problem with software quality is that the assurance is in the hands of the producers. While the producers can certainly create and perform insightful, powerful tests, it is perfectly possible to design tests that will churn away forever and never discover a defect. This is the psychological temptation when software producers write the tests that evaluate their own work.

Testing Levels

Software testing is usually done at several levels. They are commonly referred to as unit testing, integration testing, and system testing.

Test Phases

There are several types of testing in a comprehensive software test process, many of which occur simultaneously.

Unit Test

The objective of unit testing is to verify that individual units, the smallest compilable components such as functions and modules, function correctly. This is done to ensure that no bugs are present in the very basic structure of the program.

Unit testing is also called component testing. However, component testing is sometimes considered to be a level of testing above unit testing. This may be the case with a system that contains individually testable components that are composed of multiple units. For example, in a Visual Basic interface that contains forms and modules, the functions could be tested as units and the whole form could be tested as a component.

Unit testing tests software in terms of a unit: a module, a function, a specific section of code. This testing occurs while the software is being developed and before all the pieces of code are complete.

In my project, the Network Management software, each function and event has been tested individually.
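A unit test in this sense can be sketched as follows. This is a minimal illustration, not code from the project: RatingCalculator and its average method are hypothetical stand-ins for one function under test, and the hand-rolled check helper takes the place of a test framework such as JUnit, which a real project would more likely use.

```java
// Hypothetical unit under test (not from the original project).
class RatingCalculator {
    /** Returns the arithmetic mean of the ratings, or 0.0 for an empty array. */
    static double average(int[] ratings) {
        if (ratings.length == 0) {
            return 0.0;
        }
        long sum = 0;
        for (int rating : ratings) {
            sum += rating;
        }
        return (double) sum / ratings.length;
    }
}

// Minimal hand-rolled unit test for the single function above.
class RatingCalculatorTest {
    static void check(boolean condition, String name) {
        if (!condition) {
            throw new AssertionError("FAILED: " + name);
        }
    }

    /** Each check exercises the unit in isolation with one kind of input. */
    static void runAll() {
        check(RatingCalculator.average(new int[] {}) == 0.0, "empty input");
        check(RatingCalculator.average(new int[] {4}) == 4.0, "single rating");
        check(RatingCalculator.average(new int[] {1, 2, 3, 4}) == 2.5, "mean of four");
    }

    public static void main(String[] args) {
        runAll();
        System.out.println("all unit tests passed");
    }
}
```

The test touches nothing but the one function, so a failure points directly at that unit.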

Integration Testing

The objective of integration testing is to test the integration of and communication between components. Additionally, it may include testing the integration of subsystems or communication with external systems.

On some projects, integration testing may be divided into two levels: Assembly Testing and System Integration Testing.

During Assembly Testing, the integration of the software components is tested.

During System Integration Testing, the communication with external systems is tested.

In Integration testing, individual modules are put together making a larger application. This can be done repeatedly until the program is assembled in its entirety.

The individual components are combined with other components to make sure that necessary communications, links and data sharing occur properly. It is not truly system testing because the components are not implemented in the operating environment. The integration phase requires more planning and some reasonable sub-set of production-type data. Larger systems often require several integration steps.

There are three basic integration test methods:

· All-at-once

· Bottom-up

· Top-down

The all-at-once method provides a useful solution for simple integration problems, involving a small program possibly using a few previously tested modules.

Bottom-up testing involves individual testing of each module using a driver routine that calls the module and provides it with needed resources. Bottom-up testing is a more intuitive approach to testing that also usually finds errors in critical routines earlier than the top-down method. However, in a new system many modules must be integrated to produce system-level behavior, thus interface errors surface late in the process.

Top-down testing fits a prototyping environment that establishes an initial skeleton, which is filled in as individual modules are completed. The method lends itself to more structured organizations that plan out the entire test process. Although interface errors are found earlier, errors in critical low-level modules can be found later than you would like.

What all this implies is that a combination works best: low-level bottom-up testing for critical modules, and high-level top-down testing to provide an early working program that can give management and users more confidence in results early in the process. More than one set of integration environments may be needed to support this hybrid approach.
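The difference between a bottom-up driver and a top-down stub can be sketched in a few lines. Everything named here (RatingStore, InMemoryRatingStore, ReportService, and the auditor name) is hypothetical and chosen only to illustrate the two methods; it is not the project's actual design.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical modules used only to illustrate drivers and stubs.
class IntegrationSketch {
    /** Contract of the low-level module. */
    interface RatingStore {
        List<Integer> ratingsFor(String auditor);
    }

    /** Real low-level module: keeps ratings in memory. */
    static class InMemoryRatingStore implements RatingStore {
        private final Map<String, List<Integer>> byAuditor = new HashMap<String, List<Integer>>();

        void add(String auditor, int rating) {
            List<Integer> list = byAuditor.get(auditor);
            if (list == null) {
                list = new ArrayList<Integer>();
                byAuditor.put(auditor, list);
            }
            list.add(rating);
        }

        public List<Integer> ratingsFor(String auditor) {
            List<Integer> list = byAuditor.get(auditor);
            return list == null ? new ArrayList<Integer>() : new ArrayList<Integer>(list);
        }
    }

    /** High-level module that depends on the store. */
    static class ReportService {
        private final RatingStore store;

        ReportService(RatingStore store) {
            this.store = store;
        }

        String summary(String auditor) {
            List<Integer> ratings = store.ratingsFor(auditor);
            int total = 0;
            for (int rating : ratings) {
                total += rating;
            }
            return auditor + ": " + ratings.size() + " ratings, total " + total;
        }
    }

    /** Bottom-up: a driver calls the low-level module directly. */
    static List<Integer> bottomUpDriver() {
        InMemoryRatingStore store = new InMemoryRatingStore();
        store.add("asha", 3);
        store.add("asha", 5);
        return store.ratingsFor("asha");
    }

    /** Top-down: the high-level module runs against a stub with canned data. */
    static String topDownWithStub() {
        RatingStore stub = new RatingStore() {
            public List<Integer> ratingsFor(String auditor) {
                return Arrays.asList(3, 5);
            }
        };
        return new ReportService(stub).summary("asha");
    }

    /** Final integration step: both real modules wired together. */
    static String integrated() {
        InMemoryRatingStore store = new InMemoryRatingStore();
        store.add("asha", 3);
        store.add("asha", 5);
        return new ReportService(store).summary("asha");
    }
}
```

The stub lets the report logic be exercised before the store exists, while the driver lets the store be exercised before any caller exists; the last method is the point at which interface errors between the two would surface.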

System Testing

The objectives of system testing are to find defects that are attributable to the behavior of the system as a whole, rather than the behavior of individual components, and to test that the software functions as a complete system. This level of testing is different from integration testing in that the tests are concerned with the entire system, not just the interactions between components. Other than system functionality and behavior, system testing may include testing configuration, throughput, security, resource utilization, and performance.

Test Design

Just as the components of a system must be designed, tests for a system must be designed. Two common methods of test design are black box testing and white box testing. After the tests are implemented, though, the test design method may not be evident.

Black Box

In black box testing the tester knows nothing at all about the inside of the system he or she is testing. The system is tested by throwing inputs at it like throwing pennies down a well and waiting for a splash.

Black box testing focuses on designing tests that view components as opaque. The implementation details of the components are not known (or, at least, are ignored), and only externally observable behavior or functionality is tested. Other terms for black box testing are functional testing and behavioral testing.

However, behavioral testing and black box testing are slightly different. Even though both view components as black boxes, behavioral testing allows knowledge of the implementation details of components to be used in test design if necessary.

You can re-run black box tests after a change to make sure the change only produced intended results with no inadvertent effects.

White Box

In white box testing (also called glass box), the tester has knowledge of the internals of the system. Software developers on a project are best able to do this kind of testing, since they know which areas may be weak.

White box testing focuses on designing tests that view components as transparent. The implementation details of the components are known, and that knowledge is used in test design and creation of test data. Other terms for white box testing are structural testing and clear box testing.
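The contrast between the two design methods can be illustrated on one small function. The grading rule and the class GradeSketch are assumptions made up for this sketch. The black box checks are derived only from the stated specification (its boundary values), while the white box checks are derived from reading the code (one input per branch).

```java
// Hypothetical function and checks, for illustration only.
class GradeSketch {
    /**
     * Assumed specification: 0-39 is "poor", 40-69 is "fair", 70-100 is "good";
     * any other score is rejected.
     */
    static String grade(int score) {
        if (score < 0 || score > 100) {
            throw new IllegalArgumentException("score out of range: " + score);
        }
        if (score < 40) {
            return "poor";
        }
        if (score < 70) {
            return "fair";
        }
        return "good";
    }

    /** Black box: cases come from the specification alone (boundary values). */
    static boolean blackBoxChecks() {
        return grade(0).equals("poor") && grade(39).equals("poor")
            && grade(40).equals("fair") && grade(69).equals("fair")
            && grade(70).equals("good") && grade(100).equals("good");
    }

    /** White box: one case per branch in the code, including the range guard. */
    static boolean whiteBoxChecks() {
        boolean guardTaken;
        try {
            grade(101);
            guardTaken = false;
        } catch (IllegalArgumentException expected) {
            guardTaken = true;
        }
        return guardTaken
            && grade(10).equals("poor")
            && grade(50).equals("fair")
            && grade(90).equals("good");
    }
}
```

Once written, the two sets of checks look alike, which matches the observation that the design method may not be evident after the tests are implemented.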

Testing Techniques

There are many techniques that can be used to test software. Some are better than others, and some can be used in conjunction with others to get better test coverage. Here is a summary of some common testing techniques:

Manual testing

Tests are done by a human with test data that may be predetermined but may also be determined per test. In some cases, manual testing could be characterized as "banging away" at the software.

Automated Testing

Tests can be run by a tool or an unattended process, such as a nightly build, and they can be re-run many times. Test data is predetermined or generated.

Regression Testing

Tests, usually automated, are run to determine if modifications or enhancements have negatively affected functionality that passed previous testing.

Stress Testing

Tests are run to determine if the software can withstand an unreasonable load with insufficient resources or extreme usage.

Load Testing

Tests are run to determine if the software can handle a typical or maximum load or to determine the maximum sustainable load.

Performance Testing

Tests are run to determine actual performance as compared to predicted performance.

System Test

The system test phase begins once modules are integrated enough to perform tests in a whole system environment. System testing can occur in parallel with integration test, especially with the top-down method.

Performance / Stress Test

Stress tests, an important phase of the system test often called load, volume, or performance tests, try to determine the failure point of a system under extreme pressure. Stress tests are most useful when systems are being scaled up to larger environments or being implemented for the first time. Web sites, like any other large-scale system that requires multiple access and processing, contain vulnerable nodes that should be tested before deployment. Unfortunately, most stress testing can only simulate loads on various points of the system and cannot truly stress the entire network as the users would experience it. Fortunately, once stress and load factors have been successfully overcome, it is only necessary to stress test again if major changes take place.

A drawback of performance testing is that it can easily confirm that the system can handle heavy loads, but cannot so easily determine whether the system is producing the correct information. In other words, processing incorrect transactions at high speed can cause much more damage and liability than simply stopping or slowing the processing of correct transactions.
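One way to address this drawback is to have the load test check every result as well as the elapsed time. The following is a minimal sketch under stated assumptions: the square function stands in for a real transaction, the class LoadSketch is hypothetical, and the thread and request counts are arbitrary.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical load test that also verifies correctness of every result.
class LoadSketch {
    /** Stand-in for a real transaction. */
    static int square(int x) {
        return x * x;
    }

    /** Submits the requests to a thread pool and returns how many answers were wrong. */
    static int runLoad(int threads, int requests) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<Integer>> results = new ArrayList<Future<Integer>>();
            for (int i = 0; i < requests; i++) {
                final int input = i;
                results.add(pool.submit(new Callable<Integer>() {
                    public Integer call() {
                        return square(input);
                    }
                }));
            }
            int wrong = 0;
            for (int i = 0; i < requests; i++) {
                if (results.get(i).get() != i * i) {
                    wrong++;
                }
            }
            return wrong;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new RuntimeException(e);
        } catch (ExecutionException e) {
            throw new RuntimeException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        int wrong = runLoad(8, 10000);
        long millis = (System.nanoTime() - start) / 1000000;
        System.out.println("elapsed ms: " + millis + ", wrong answers: " + wrong);
    }
}
```

Reporting the number of wrong answers alongside the timing keeps a fast but incorrect run from being mistaken for a pass.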

Regression Test

Regression tests confirm that the implementation of changes has not adversely affected other functions. Regression testing is a type of test as opposed to a phase in testing. Regression tests apply at all phases whenever a change is made.
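In practice a regression test often takes the form of a recorded baseline that is replayed after every change. The following is a minimal sketch: the normalize function, the baseline cases, and the class RegressionSketch are all hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

// Hypothetical baseline replay, for illustration only.
class RegressionSketch {
    /** Function under maintenance: normalises a user name. */
    static String normalize(String name) {
        return name.trim().toLowerCase(Locale.ROOT);
    }

    /** Inputs and outputs recorded when the previous version passed testing. */
    static Map<String, String> baseline() {
        Map<String, String> cases = new LinkedHashMap<String, String>();
        cases.put(" Alice ", "alice");
        cases.put("BOB", "bob");
        cases.put("carol", "carol");
        return cases;
    }

    /** Re-runs every recorded case and returns the inputs whose output changed. */
    static List<String> findRegressions() {
        List<String> failures = new ArrayList<String>();
        for (Map.Entry<String, String> recorded : baseline().entrySet()) {
            if (!normalize(recorded.getKey()).equals(recorded.getValue())) {
                failures.add(recorded.getKey());
            }
        }
        return failures;
    }
}
```

An empty result means the change left previously passing behavior intact; any entry in the list names an input that used to work and no longer does.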

Quality Assurance Test

Some organizations maintain a Quality Group that provides a different point of view, uses a different set of tests, and applies the tests in a different, more complete test environment. The group might look to see that organization standards have been followed in the specification, coding and documentation of the software. They might check to see that the original requirement is documented, verify that the software properly implements the required functions, and see that everything is ready for the users to take a crack at it.

User Acceptance Test and Installation Test

Traditionally, this is where the users ‘get their first crack’ at the software. Unfortunately, by this time, it's usually too late. If the users have not seen prototypes, been involved with the design, and understood the evolution of the system, they are inevitably going to be unhappy with the result. If you can perform every test as user acceptance tests, you have a much better chance of a successful project.

Repair

The whole point of all quality processing is to prevent defects as much as possible, and to discover those defects that do occur before the customer does. The repaired code should start the testing process all over again.

Destructive vs. validation testing

Validation tests are positive tests. They confirm that the software meets requirements - that an input or set of inputs gives the desired output.

Destructive tests try to determine if the software does things it shouldn't do. The ratio of destructive tests to validation tests in a mature test suite should be about 4:1.
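The two kinds of test can be shown side by side on a small input parser. The parser, its 1 to 5 rating range, and the class DestructiveSketch are assumptions made for this sketch. It pairs one validation case with four destructive ones, mirroring the ratio mentioned above.

```java
// Hypothetical parser and checks, for illustration only.
class DestructiveSketch {
    /** Parses a rating from 1 to 5; any other input is rejected. */
    static int parseRating(String text) {
        if (text == null) {
            throw new IllegalArgumentException("rating is missing");
        }
        int value;
        try {
            value = Integer.parseInt(text.trim());
        } catch (NumberFormatException e) {
            throw new IllegalArgumentException("not a whole number: " + text);
        }
        if (value < 1 || value > 5) {
            throw new IllegalArgumentException("out of range: " + value);
        }
        return value;
    }

    /** Returns true if the bad input is rejected cleanly. */
    static boolean rejects(String bad) {
        try {
            parseRating(bad);
            return false;
        } catch (IllegalArgumentException expected) {
            return true;
        }
    }

    /** Validation test: a valid input gives the desired output. */
    static boolean validationCheck() {
        return parseRating("3") == 3;
    }

    /** Destructive tests: the parser must not accept input it should refuse. */
    static boolean destructiveChecks() {
        return rejects(null) && rejects("abc") && rejects("0") && rejects("3.5");
    }
}
```

The destructive cases only pass if bad input is refused with the documented exception; any other exception type would escape and fail the check.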

Reproducibility

Reproducibility is critical for good functional testing. You must ensure that the same input drives the same output. This means that the test environment cannot change in unknown ways or the integrity of the test will be compromised.
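One common source of non-reproducible tests is randomly generated test data. A minimal sketch of a remedy, assuming ratings from 1 to 5 as test data and a hypothetical class ReproducibleData, is to fix the seed of the generator so that every run sees the same inputs.

```java
import java.util.Random;

// Hypothetical test-data generator with a fixed seed.
class ReproducibleData {
    /** Generates count ratings in the range 1..5; the same seed always gives the same data. */
    static int[] ratings(long seed, int count) {
        Random random = new Random(seed);
        int[] out = new int[count];
        for (int i = 0; i < count; i++) {
            out[i] = 1 + random.nextInt(5);
        }
        return out;
    }
}
```

Recording the seed with each test run makes it possible to replay exactly the inputs that exposed a defect.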

Risk analysis

· New circle addition

· System crash

· Network flaws (busy or crashed)

· Careless data entry

· Platform specific (Windows compatible)

CODE OPTIMIZATION

· The required changes are being brought about according to user’s requirements.

· The modularity is being enforced wherever possible (if not already present).
